Photographs and videos were once trusted as evidence of reality, but that trust is crumbling in the face of powerful image generation models. In the past, a scanned receipt or a photo of a damaged Amazon delivery could serve as solid proof to get a claim processed. Today, models such as Google's Gemini 2.0 and Imagen 3, alongside platforms like Midjourney, DALL·E 3, Stable Diffusion, and Flux, have made AI image creation dramatically faster, more accessible, and more realistic. The result is a surge of convincing forgeries and urgent concerns for privacy, security, and societal trust.

When OpenAI released GPT-4o's image generation capabilities in March 2025, it described the model as its "most advanced image generator yet," deliberately built to make image creation a primary capability of its AI system [1].

In this article, we'll explore:

- The democratization of AI image generation technologies that have made creating realistic fake images accessible to nearly anyone

- Real-world examples showing how these technologies are being exploited for privacy violations, financial fraud, and the spread of misinformation

- Practical techniques to help you identify potentially manipulated or AI-generated images

- Actionable strategies to protect your personal images and information in this new era

- The emerging technological solutions and policy initiatives being developed to address these challenges

Multimodal Breakthroughs

The past two years have witnessed remarkable advances in AI image generation. Diffusion models like Stable Diffusion XL (open-sourced in 2023) substantially raised the photorealism and resolution available in freely accessible tools [2]. That progress culminated in 2025 with OpenAI's GPT-4o, which integrates sophisticated image generation directly into a conversational AI interface [3].

Users can generate and modify images through natural conversation with GPT-4o, refining details in real time and creating intricate, high-resolution compositions on demand. Notably, GPT-4o renders text within images effectively and handles multiple distinct objects in complex scenes, addressing characteristic flaws (such as garbled text or malformed hands) that plagued earlier models [3]. By 2025, AI-generated images combine remarkable realism with precise adherence to user specifications, a substantial leap that makes forgeries considerably more convincing than those of just 12 to 24 months earlier.

Free, Fast, and Everywhere

Just as significant as the quality improvements is the dramatic expansion of accessibility. Capabilities that once required technical expertise or premium subscriptions are now freely available to millions. Open-source image models run on consumer hardware or through no-cost web applications. When GPT-4o's image generation features were extended to all ChatGPT users (including those on free plans) in 2025, adoption proved explosive: over 130 million people created more than 700 million images during the first week after launch [4].

This ubiquity enables virtually anyone with internet access to produce sophisticated synthetic images instantaneously, without financial investment or specialized knowledge. While this democratization offers substantial creative benefits, it simultaneously reduces barriers to potential misuse.

Loosening Guardrails

Early AI image generation systems (such as OpenAI's 2022-era DALL·E 2) implemented strict prohibitions against creating realistic depictions of public figures or potentially deceptive content. However, GPT-4o's release marked a significant shift as OpenAI "peeled back" certain safeguards [5]. In 2025, the company revised its policies to permit previously prohibited outputs: GPT-4o now generates images of real public figures (including politicians and celebrities) upon request.

OpenAI characterizes this policy evolution as transitioning from "blanket refusals" toward a more nuanced approach focusing exclusively on genuinely harmful applications. Nevertheless, this relaxation of restrictions—combined with an opt-out rather than opt-in system for public figures—has raised concerns that AI could increasingly facilitate photorealistic deepfakes, potentially enabling mischief and misinformation.

Fake Damage and False Claims

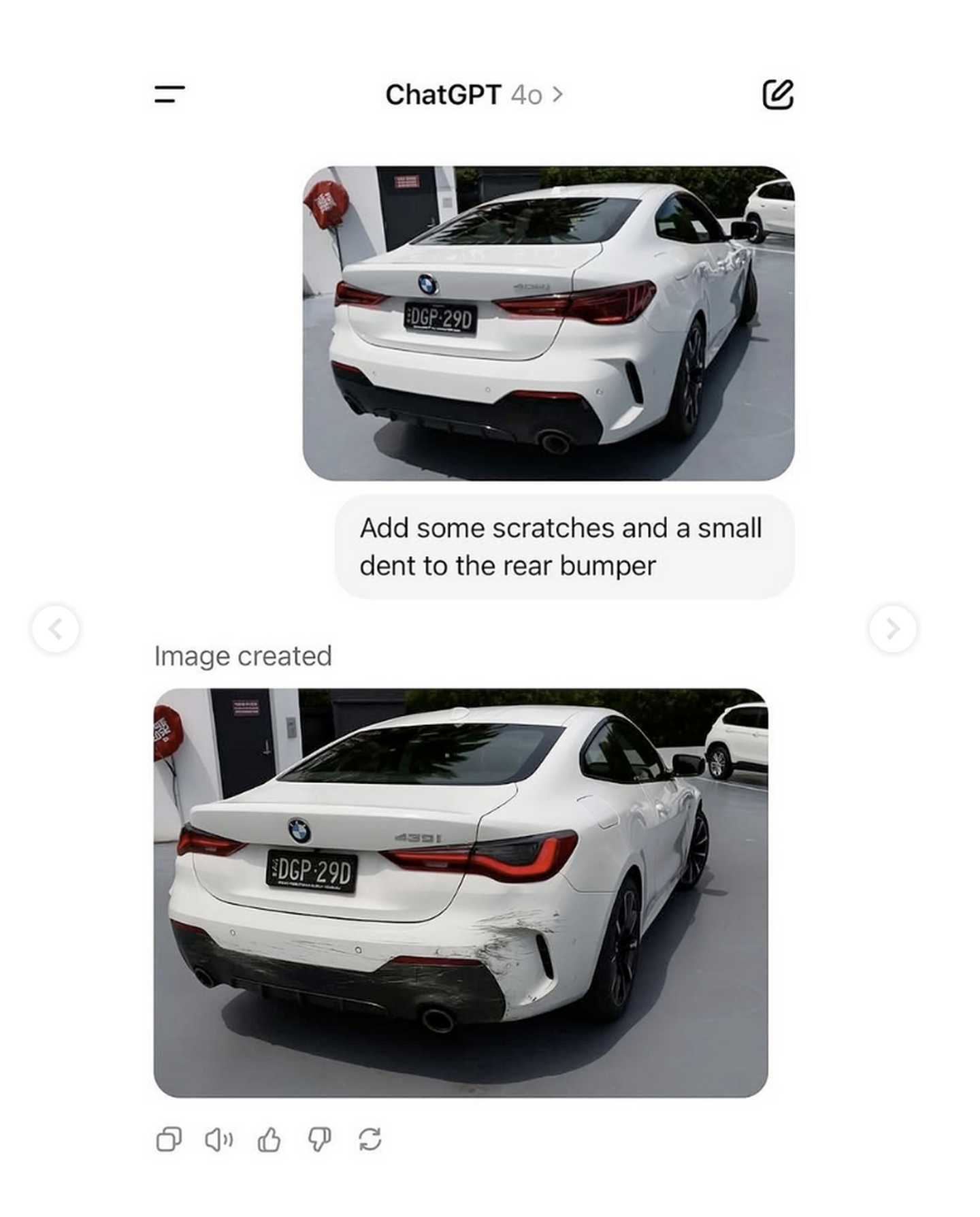

Insurance companies have traditionally relied on photographic evidence to validate damage claims. However, advanced AI image generation now enables the creation of convincing fraudulent evidence. In one documented case that gained viral attention, a user demonstrated (Figure 1) how AI could transform an undamaged car bumper photo by adding realistic "scratches and a small dent" through a simple text prompt [6].

By April 2025, security reports confirmed that individuals were actively exploiting ChatGPT's image generation capabilities to fabricate accident photos and receipts for insurance fraud. What began as creative experimentation rapidly evolved into financial exploitation, prompting one cybersecurity analyst to identify an emerging pattern of "AI-assisted insurance fraud" that presents significant challenges for insurers and financial institutions [7].

AI-Generated Receipts and Documents

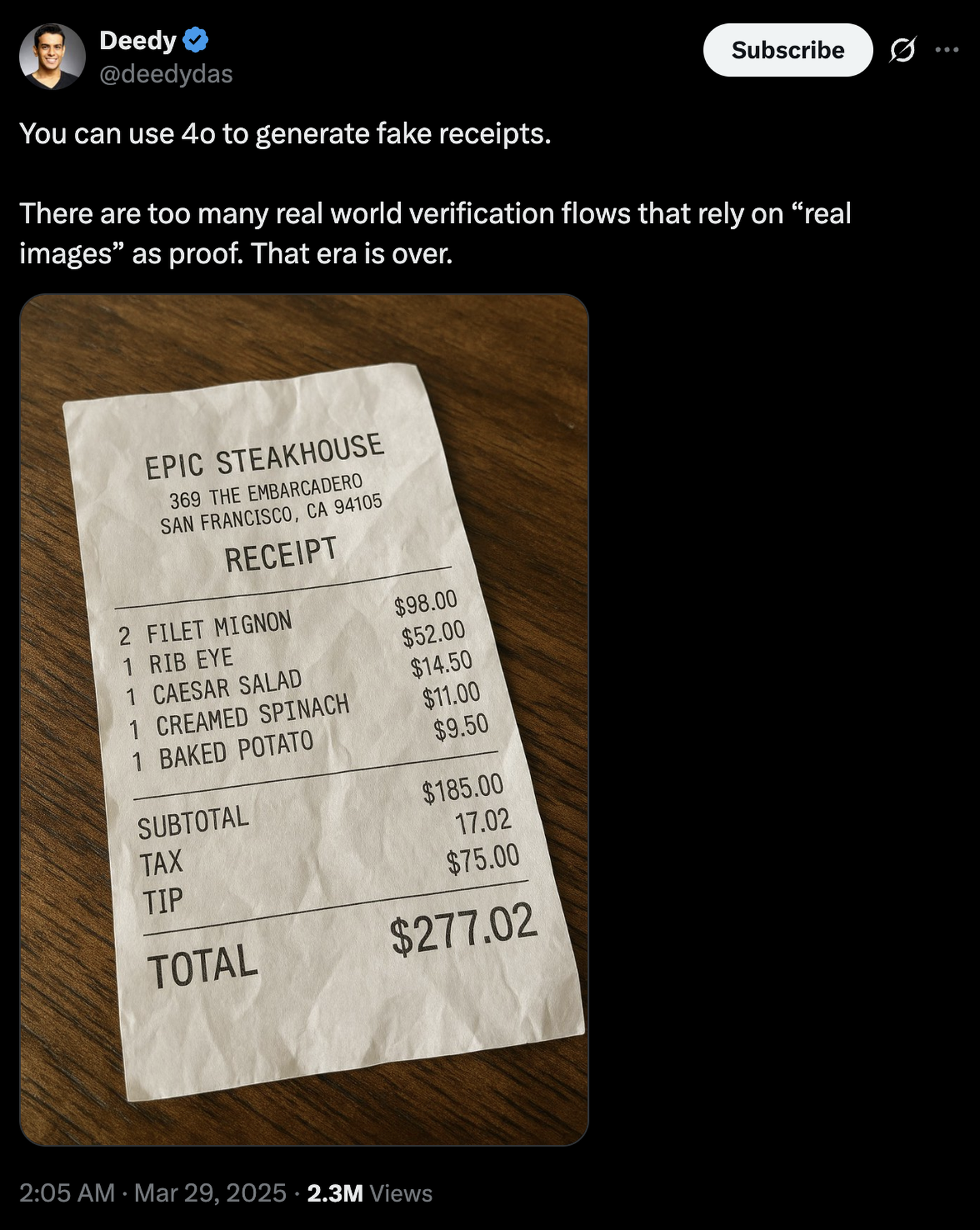

Finance departments face similar vulnerabilities in their expense reimbursement processes. Demonstrations have shown AI models generating remarkably authentic-looking restaurant receipts (Figure 2) that contain all the expected elements, including business information, itemized purchases, tax calculations, and totals, despite representing no actual transaction [8].

According to FBI reports, sophisticated scammers now combine AI-generated images with synthetic text and conversational chatbots to build end-to-end deception schemes. The visual component proves particularly effective because humans instinctively trust what they can see. Perfectly rendered fraudulent documentation also removes one of the traditional warning signs of deception: poor-quality or inconsistent paperwork [9].

Identity Fraud and Visual Misinformation: From Fake IDs to Viral Hoaxes

Identity forgery has been revolutionized by generative AI. Creating convincing identification documents once required specialized skills; now AI systems can generate realistic ID photos and combine them with stolen personal information to create complete "synthetic identities." By 2024, the U.S. Treasury's Financial Crimes Enforcement Network (FinCEN) documented increased incidents of deepfake identity fraud, with criminals using AI-generated documents to circumvent Know Your Customer (KYC) verification systems. Even sophisticated biometric verification systems have proven vulnerable, with FinCEN reporting cases where entirely synthetic AI-generated faces successfully passed live verification checks [10].

This same technology that enables convincing identity forgery also powers the spread of viral misinformation. The potential for widespread impact was first demonstrated in March 2023 when an AI-created image of Pope Francis wearing a stylish Balenciaga puffer coat went viral across social platforms. Many viewers, including some journalists, initially believed the image was authentic, with cultural commentators later describing it as "the first real mass-level AI misinformation case" [11].

In January 2025, amid California's devastating wildfires, a series of AI‑fabricated images depicting the Hollywood Sign engulfed in flames circulated widely on platforms like X and Instagram. So convincing were these hoaxes that authorities had to publicly reassure residents that the landmark remained unscathed—even as nearby blazes were contained [12].

The common thread connecting fake IDs and viral hoaxes is the erosion of visual credibility. Whether manipulating personal identities or public perceptions, AI-generated images exploit our inherent trust in what we see, creating cascading effects that range from financial fraud to public panic and institutional distrust.

As AI-generated images become increasingly sophisticated, individuals need a comprehensive approach to both identify synthetic content and protect their personal digital presence.

Recognizing AI-Generated Images

Despite technological advances, several indicators can help identify potential fakes:

- Anatomical inconsistencies: Look for irregularities in hands, fingers, and facial features. Many generators still struggle with complex anatomical details, although the newest models produce these flaws far less often

- Lighting and shadow discrepancies: Check whether shadows align logically with light sources

- Background anomalies: Examine backgrounds for blurriness, simplicity, or contextually inappropriate elements

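- Text irregularities: Scrutinize in-image text for gibberish, spelling errors, or inconsistent fonts. These remain a useful indicator of synthetic media, but clean text no longer proves authenticity, since models such as GPT-4o now render text effectively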

- Unnatural perfection: Be wary of flawless skin textures or unnaturally symmetric features lacking the imperfections found in genuine photos

- Digital artifacts: Look for small glitches or color inconsistencies at object boundaries

Reverse image search can help determine if an image has appeared elsewhere online. However, even technical detection methods are losing effectiveness—research by Chandra et al. (2025) found detection accuracy dropped by 45-50% against the latest AI-generated images [13].
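One common building block for spotting reused or lightly edited copies of an image is a perceptual hash, which stays stable under small changes such as brightness shifts. The sketch below is a minimal average-hash illustration, not a description of how any particular reverse image search service works. It assumes the image has already been decoded and downscaled to a small grayscale grid by an imaging library; the function names are hypothetical.

```python
def average_hash(gray: list[list[int]]) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


# Toy 4x4 "image" (assumed already grayscale and downscaled).
original = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A uniformly brightened copy and a tonally inverted image for comparison.
brightened = [[p + 10 for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

print(hamming_distance(average_hash(original), average_hash(brightened)))
print(hamming_distance(average_hash(original), average_hash(inverted)))
```

A small distance suggests two files show the same picture; a large distance suggests they do not. Real tools typically use larger grids and more robust hashes, so treat the threshold as something to tune rather than a fixed rule.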

Safeguarding Your Digital Identity

Protecting your personal images requires a proactive, multi-layered approach:

- Audit your digital footprint: Regularly use reverse image search to locate where your photos appear across the web

- Implement strategic privacy settings: Configure granular controls on social platforms while recognizing their limitations—a Consumer Reports investigation found 62% of platforms retained images for AI training despite maximized privacy settings

- Practice data minimization: Before posting, the Electronic Frontier Foundation recommends asking: "Is this image necessary? Who might access it? What's the worst-case scenario if misused?"

- Deploy technical protections: Use visible watermarks on important images, explore adversarial tools like Glaze that confuse AI systems without affecting human viewing, and strip metadata containing sensitive information

- Monitor for unauthorized use: Set up alerts for your name and images using Google Alerts or specialized services like PimEyes

- Exercise your legal rights: Use the GDPR's right to erasure (the "right to be forgotten") in the EU or state-specific protections in the US, documenting thoroughly if you discover misuse

- Consider protection services: For high-risk individuals, digital identity protection services can provide comprehensive monitoring
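To make the "strip metadata" step above concrete, here is a minimal sketch that removes APP1 segments from a JPEG, which is where Exif data (including GPS coordinates) and XMP metadata are usually stored. It uses only the Python standard library and assumes a simply structured file: every marker before the image data carries a length field and there are no padding bytes between segments. The function name is hypothetical, and a maintained tool or imaging library is a safer choice for real use.

```python
import struct

SOI = b"\xff\xd8"  # JPEG start-of-image marker
APP1 = 0xE1        # segment type that usually carries Exif or XMP
SOS = 0xDA         # start of scan: compressed image data follows


def strip_app1_segments(jpeg_bytes: bytes) -> bytes:
    """Return a copy of a JPEG with all APP1 (Exif/XMP) segments removed."""
    if jpeg_bytes[:2] != SOI:
        raise ValueError("not a JPEG: missing start-of-image marker")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg_bytes[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it untouched.
            out += jpeg_bytes[pos:]
            return bytes(out)
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg_bytes[pos:end]
        pos = end
    out += jpeg_bytes[pos:]
    return bytes(out)
```

To use it, read the photo in binary mode, pass the bytes to the function, and write the result to a new file, keeping the original until you have confirmed the copy still opens correctly.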

Combating AI Image Misuse

The technological community is developing multiple approaches to address AI-generated image misuse. Detection algorithms analyze distinctive patterns not found in authentic photographs. While promising, these tools face challenges as generation techniques evolve, creating an ongoing "arms race" between detection and creation technologies. According to Deloitte Insights, the market for deepfake detection technology is projected to grow from $5.5 billion in 2023 to $15.7 billion by 2026, reflecting the urgency of this challenge [14].
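To put the projected market growth in perspective, the two figures quoted above imply a compound annual growth rate over the three years from 2023 to 2026. The short calculation below derives that rate from the quoted numbers only; the helper name is hypothetical.

```python
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures quoted above: $5.5 billion (2023) to $15.7 billion (2026).
rate = compound_annual_growth_rate(5.5, 15.7, 2026 - 2023)
print(f"{rate:.1%}")  # prints 41.9%
```

In other words, the projection assumes the market grows by roughly 42% every year across the period.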

Watermarking has emerged as another key strategy, ranging from visible labels to imperceptible embedded patterns detectable by specialized software. Content provenance initiatives like the Coalition for Content Provenance and Authenticity (C2PA) are developing open standards to verify media authenticity throughout its lifecycle [15]. By early 2024, Adobe reported that Content Credentials had been implemented in a "swiftly growing range of platforms and technologies," including cameras, smartphones, and editing software, with Leica releasing the world's first digital camera with built-in Content Credentials support [16]. Most experts agree that effective defense requires combining detection systems, robust watermarking, and comprehensive provenance tracking.
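The idea behind provenance tracking can be sketched in a few lines: bind a set of claims about an image to a hash of its exact bytes, then sign the result so that any later change to the pixels or the claims is detectable. The example below is a simplified illustration under stated assumptions and is not the C2PA format. It uses a shared-secret HMAC from the Python standard library as a stand-in for the certificate-based signatures that real provenance systems rely on, and the function names and claim fields are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(image_bytes: bytes, claims: dict, key: bytes) -> dict:
    """Bind claims to the image's hash and sign the result."""
    payload = {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
        "claims": claims,
    }
    body = json.dumps(payload, sort_keys=True).encode()
    signature = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: dict, key: bytes) -> bool:
    """Check the signature first, then check the image still matches."""
    body = json.dumps(manifest["payload"], sort_keys=True).encode()
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claims or the recorded hash were altered
    actual = hashlib.sha256(image_bytes).hexdigest()
    return manifest["payload"]["sha256"] == actual


key = b"demo-signing-key"          # assumption: illustrative secret only
image = b"raw image bytes go here"  # assumption: placeholder content
manifest = make_manifest(image, {"tool": "example-camera"}, key)

print(verify_manifest(image, manifest, key))
print(verify_manifest(image + b"edited", manifest, key))
```

The first check succeeds because the bytes are unchanged, and the second fails because the edited bytes no longer match the signed hash. A scheme like this only proves that content is unmodified since signing; it says nothing about images that never carried a manifest, which is one reason the text pairs provenance with detection and watermarking.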

Regulatory Responses: Emerging Frameworks and Their Impact

Global regulatory approaches to AI image generation vary significantly in scope and effectiveness. The EU leads with its comprehensive AI Act, which mandates disclosure of AI-generated content through visible labels and machine-readable marking [17]. Early evidence suggests these measures are having an impact: a 2025 Oxford Internet Institute study found a 34% decrease in unattributed AI-generated content across European platforms since implementation, with major companies like Google and Meta achieving over 90% compliance.

In the United States, regulation has emerged primarily at the state level. By 2025, 21 states had enacted laws addressing deepfakes in elections and non-consensual imagery. California's enforcement has shown particular promise, with legal action in 47 cases and successful prosecutions establishing important precedents for liability. The proposed federal DEEPFAKES Accountability Act aims to establish nationwide protections but remains pending [18].

Despite these advances, significant challenges remain. Cross-border enforcement is particularly problematic: INTERPOL and Europol report that only 12% of identified cross-border AI fraud cases in 2024 led to successful legal action. Technical circumvention also threatens regulatory effectiveness, as demonstrated by University of Maryland researchers who broke every AI watermarking system they tested.

The World Economic Forum projects that enhanced regulation could reduce AI-driven fraud by up to 42% by 2027, suggesting a favorable cost-benefit ratio despite adding 3-7% to business compliance costs. This indicates that while imperfect, regulatory frameworks represent a necessary component of a comprehensive response to AI image misuse.

The rapid evolution of AI image generation technology has fundamentally altered our relationship with visual information. As these tools become increasingly sophisticated and accessible, we face unprecedented challenges to privacy, security, and trust.

Addressing these challenges requires a coordinated response across multiple fronts. Technology companies must continue developing robust detection algorithms, watermarking solutions, and content provenance standards. Legal frameworks, like the EU AI Act's transparency mandates, provide important guardrails but need broader international harmonization to be truly effective.

For individuals, awareness and proactive protection strategies remain essential first lines of defense. By limiting our digital footprints, using privacy-enhancing tools, and developing critical literacy skills to question suspicious content, we can reduce vulnerability to AI-powered deception.

The democratization of image generation capabilities brings tremendous creative potential but also significant risks. As Global Witness aptly noted, the chaos wrought by unbridled generative AI was not inevitable; it is partly a result of "deploying shiny new products without sufficient transparency or safeguards" [20]. By fostering collaboration between technologists, policymakers, and an informed public, we can build systems that maximize the benefits of these powerful tools while minimizing their potential for harm.